Like its Ivy League peers, Columbia started out as a sleepy institution focused on teaching history and literature to undergraduates — a model of higher education typical of eighteenth-century colleges on both sides of the Atlantic. But its location in New York City, the future industrial hub of America, soon pushed it to broaden its mission. In the early years of the republic, when the city needed physicians, lawyers, and other professionals to serve its rapidly growing population, Columbia expanded its curricula to train them. When, at the dawn of the industrial era, the US needed engineers to build the infrastructure for a modern economy — from bridges and railways to aqueducts and factories — the University established one of the country's first engineering schools. And when sanitation, infectious disease, labor conditions, and ethnic tensions became defining challenges for cities nationwide, Columbia launched new graduate programs in areas like political science, journalism, public health, and social work.

By the turn of the twentieth century, most Americans’ lives had already been improved in one way or another by Columbians’ ingenuity and idealism. DeWitt Clinton 1786CC, 1826HON, as governor of New York, was the driving force behind the Erie Canal, which opened the interior of the country to trade. Horatio Allen 1823CC, a Columbia-trained engineer, helped to create the nation’s railways, and William Barclay Parsons 1879CC, 1882SEAS, a fellow engineer, designed the original New York City subway system, to name just a few examples.

But even as Columbia alumni were shaping the nation’s infrastructure and public institutions, something even more transformative was happening on campus. As sociology professor and former provost Jonathan R. Cole ’64CC, ’69GSAS writes in his 2009 book The Great American University, Columbia was becoming a new kind of institution — one that didn’t just impart knowledge but generated it. To meet America’s growing demand for scientific, technical, and policy expertise, Columbia and a handful of other US universities began to integrate research into the fabric of their institutions like no schools had ever done before.

The nature of the research they were supporting was distinct too. Whereas the German universities that had until then overseen the world’s most accomplished research programs prioritized theoretical science at the expense of more practical inquiries, Columbia and its US peers pursued a mix of so-called pure science and applied research that aimed to solve real-world problems. So while graduate students at the University of Berlin in 1910 were refining chemical principles and pontificating about Max Weber’s sociological theories, their counterparts at Columbia were just as likely to be testing drinking water and gathering evidence about the living conditions in Lower East Side tenements.

This new American model of higher education would by the mid-twentieth century emerge as the world’s dominant system for producing all types of scientific research. After helping to secure the Allied victory in World War II with breakthroughs like radar, antibiotics, and the atomic bomb, science was newly recognized as a source of national power, and Washington quickly moved to solidify America’s advantage by establishing a permanent system to fund it. From that point onward, the lion’s share of US medical, scientific, engineering, and social-science research would be conducted not at government-run facilities but on university campuses, financially supported by a constellation of federal agencies. The reasoning, Cole writes, was simple: scientists working independently were most likely to generate the novel ideas that would keep Americans safe, drive economic growth, and improve lives around the world.

Over the past century, Columbia has consistently ranked among the top US universities in scientific productivity. In the past five years alone, nearly two thousand inventions have emerged from Columbia’s labs, fueling the nation’s information-based economy. Columbia’s research priorities are always evolving, shaped by the challenges and tools of each era. But the biggest breakthroughs have stood the test of time and only seem more important with each passing year. What could be next?

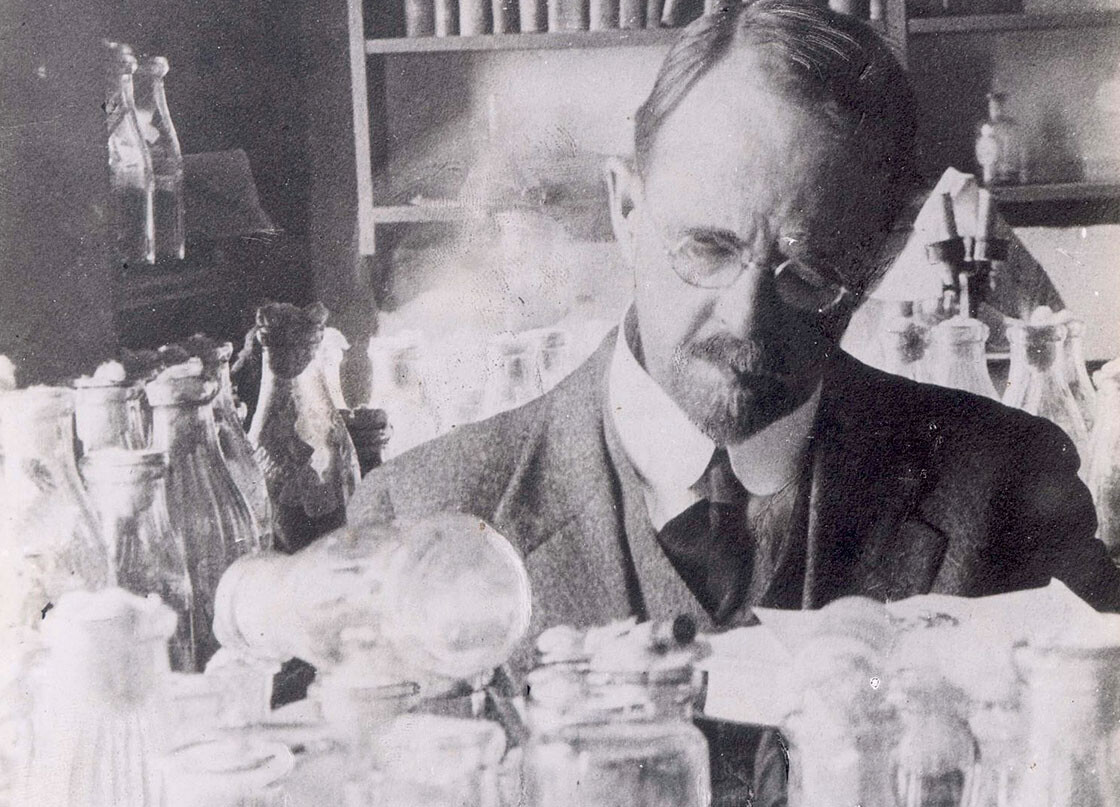

When Thomas Hunt Morgan began studying fruit flies in 1907, the scientific community was abuzz with speculation that cells might contain instructional codes capable of guiding an organism’s development. But no one knew where those codes resided, what physical form they might take, or how to go about finding them. In a series of ingenious experiments, Morgan and his colleagues traced inherited traits like eye color, wing shape, and sex to specific locations on chromosomes — squiggly, thread-like structures that had been glimpsed inside cell nuclei decades earlier but whose function had remained a mystery. In doing so, they launched the modern field of genetics.

Over the next few decades, Morgan and his students went on to make many more important discoveries. They determined that genes are arranged on chromosomes like beads on a string, that they can be damaged by radiation, and that mutations can be passed on to offspring. Morgan and several of his students, including Hermann Muller 1910CC, 1916GSAS and Joshua Lederberg ’44CC, would win Nobel Prizes. Their findings set the course for twentieth-century biology, laying the groundwork for everything from the discovery of DNA’s double-helix structure to the development of lifesaving treatments for cancer and other conditions.

But Morgan’s influence extended beyond biology. Writing in Columbia Magazine, Nobel Prize–winning neuroscientist and University Professor Eric Kandel once described how Morgan’s unconventional approach to running his Schermerhorn Hall lab helped to transform how nearly all scientific research has been done in the United States since. In contrast to the hierarchical, insular German model that had long dominated — in which professors typically kept students at arm’s length — Morgan fostered a collaborative, meritocratic environment that prized creativity and open debate. His approach was eventually adopted throughout Columbia and beyond, helping to entice many of the world’s brightest students to enroll in US universities.

As Morgan’s findings helped to shift “the center of influence in biology” from Europe to the United States, Kandel wrote, his lab’s “fully democratic and egalitarian atmosphere … soon came to characterize the distinctively American atmosphere of university research.”

At the turn of the twentieth century, many scholars gave intellectual cover to white Europeans and Americans who saw themselves as superior to other groups, claiming that intelligence, moral character, and nearly every other aspect of human potential were determined by race and ethnicity.

Franz Boas 1929HON, a cultural anthropologist who taught at Columbia from 1896 to 1936, did more than any other academic to dismantle that myth. Through his empirical studies of Indigenous peoples and immigrant communities in North America, he demonstrated that human behavior is shaped primarily by culture and environment, and that biological differences between groups have no significant bearing on how people think or act. At Columbia, Boas inspired a generation of anthropologists — including Ruth Benedict 1923GSAS, Zora Neale Hurston 1928BC, and Margaret Mead 1923BC, 1928GSAS, ’64HON — who carried forward his vision of research grounded in cultural humility while also expanding the field’s lens to include questions of gender, sexuality, and power. Boas and his students together helped to spearhead what the scholar Charles King called, in his 2019 cultural history Gods of the Upper Air, “the greatest moral battle of our time: the struggle to prove that — despite differences of skin color, gender, ability, or custom — humanity is one undivided thing.”

“If it is now unremarkable … for a gay couple to kiss goodbye on a train platform,” King writes, “for a college student to read the Bhagavad Gita in a Great Books class, for racism to be rejected as both morally bankrupt and self-evidently stupid, and for anyone, regardless of their gender expression, to claim workplaces and boardrooms as fully theirs — if all of these things are not innovations or aspirations but the regular, taken-for-granted way of organizing society, then we have the ideas championed by the Boas circle to thank for it.”

After earning an engineering degree at Columbia, Herman Hollerith 1879SEAS, 1890GSAS took a job as a statistician for the 1880 US Census — at the time, the largest data-collection effort in history. The project gathered an unprecedented amount of demographic information on the nation’s fifty million people and took seven years to complete, rendering its hand-sorted results outdated by the time they were published. Watching his colleagues toil away with pen and paper, Hollerith envisioned a better approach: census workers could record data by punching holes into paper cards and then process the cards en masse, using new machines he would design with electrical contacts and mechanical counters.

Hollerith’s automated punch-card system, which he developed as part of his Columbia PhD dissertation, revolutionized data analysis. The Census Bureau adopted it, enabling the 1890 count to be completed in just three years. Soon insurance companies, railroads, accounting firms, and other businesses interested in sorting large amounts of data were lining up to use the technology, whose electric circuits and binary coding (holes were either punched or not) anticipated modern computing. In 1896, Hollerith founded the Tabulating Machine Company, which, after several mergers and name changes, became the International Business Machines Corporation, or IBM.

In the early days of radio, listeners could often expect to hear one thing, no matter where they turned the dial: static. That’s because AM (amplitude modulation) transmission, the reigning technology in the early twentieth century, was highly sensitive to electrical interference from thunderstorms, household appliances, and even the sun. Experts insisted that nothing could be done. Weak, noisy reception, they said, was simply the nature of broadcasting.

Edwin Armstrong 1913SEAS, 1929HON, a Columbia professor of electrical engineering, proved them wrong by dreaming up an entirely different way of encoding sound in electromagnetic waves. Instead of adjusting the height of each wave, which is how AM transmission worked, he found a way to transmit sound by varying the frequency of the waves, or how tightly packed they were. Frequency modulation, or FM, proved to be almost entirely impervious to environmental disturbances, improving sound quality a hundredfold or more.

Armstrong’s FM system, patented in 1933, went on to dominate the airwaves. Other inventions of his, like the superheterodyne receiver, influenced the development of nearly all modern wireless technologies.

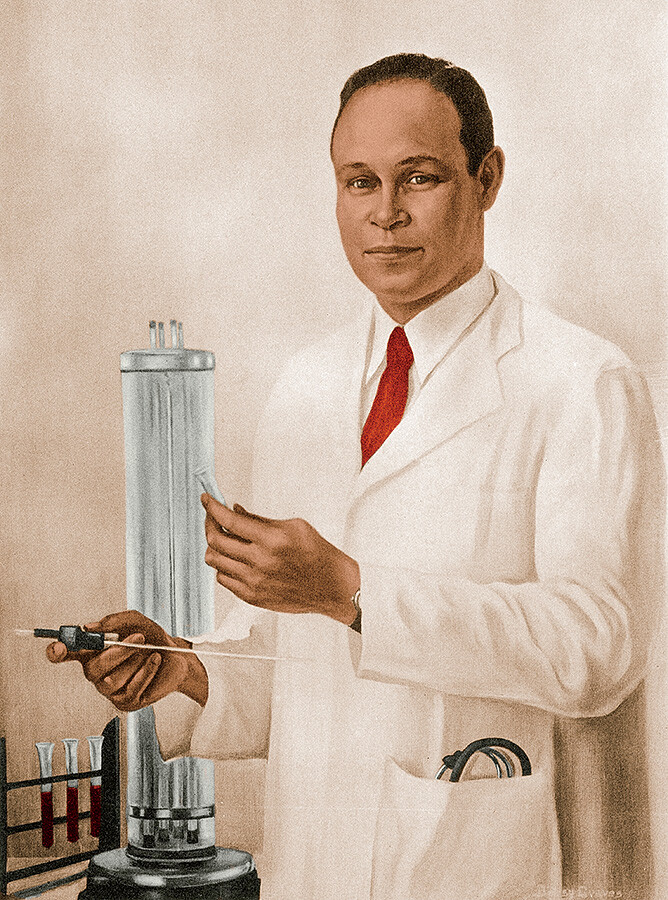

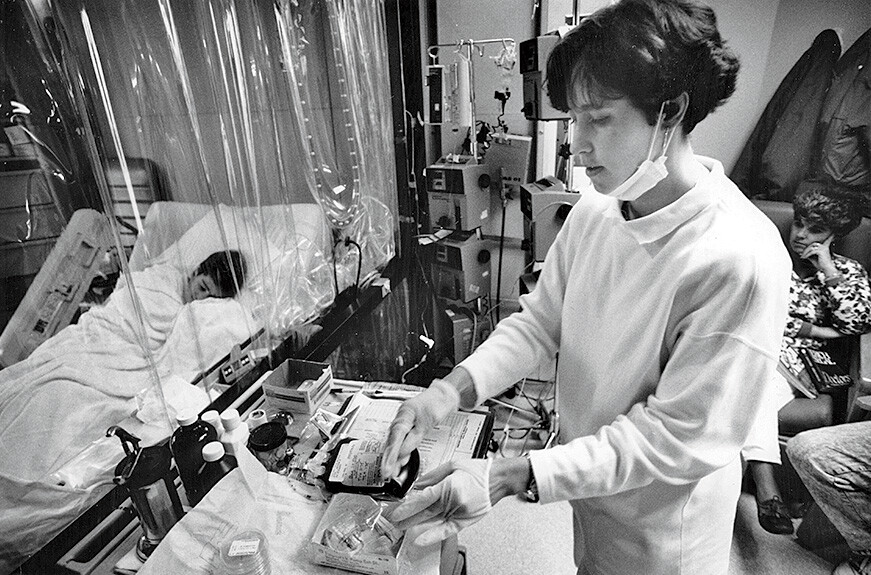

Every year, millions of Americans receive lifesaving blood transfusions after surviving car crashes, workplace accidents, natural disasters, and other catastrophes. The ability to quickly deliver blood when it’s needed can be traced back to Charles R. Drew ’40VPS. As a medical student at Columbia, Drew conducted pioneering research on blood-preservation techniques and developed the first standardized procedures for collecting, storing, and distributing large quantities of blood. Upon finishing his degree, he was appointed to lead a US government effort to collect blood donations for wounded British soldiers and civilians in World War II; in 1941, the American Red Cross tapped him to direct its first national blood-bank program. Drew, who was African-American, eventually resigned that position to protest the organization’s insistence on segregating blood donations by race — an unscientific policy that it finally lifted in 1950. But by then, he had laid the groundwork for America’s entire blood-donation system.

One of America’s most familiar antibiotics was discovered by accident in 1943, when CUIMC lab researcher Balbina Johnson noticed that a dangerous bacterium that she had cultured from a young girl’s leg wound had been vanquished by another strain commonly found in soil. Working with Columbia surgeon Frank Meleney 1916VPS, Johnson soon purified the benevolent microbe’s disease-fighting compounds to create bacitracin, an antibiotic whose lifesaving potential became evident when the researchers observed it kill certain pathogens that penicillin was powerless against.

Approved by the FDA for topical use in 1948, bacitracin quickly became a staple of Americans’ medicine cabinets, reducing the chances that minor cuts, scrapes, and burns would lead to serious infections. Still widely used today, bacitracin is a key ingredient in ointments like Neosporin. And the soil-dwelling organisms that produce it may yet have more tricks to offer. In 2024, a team of Dutch, German, and British researchers proposed that these microbes’ bacteria-killing secretions, if enhanced and made even more potent through genetic reengineering, could defeat many of the drug-resistant bugs now wreaking havoc on human health around the world.

Robert K. Merton ’85HON, a Columbia professor from 1941 until his death in 2003, was arguably the most influential American sociologist of the twentieth century. A brilliant and prolific theorist, he gave the field many of its core concepts, including “role model,” “reference group,” “unintended consequence,” and “deviant behavior.” But it is his idea of the “self-fulfilling prophecy” that has had the broadest impact in academia and beyond.

The notion that our beliefs can bring about the very outcomes we expect is hardly new, of course. Literature is filled with characters, from Oedipus to Macbeth, whose fates are sealed the moment they imagine them. Merton’s genius was to turn this literary motif into a sociological construct, revealing its power to explain why individuals, groups, and institutions often behave in ways that make their assumptions come true.

First articulated in 1948, Merton’s theory has proved to be an unusually versatile analytic device. Scholars have used it to understand how consumer expectations can drive economic booms or busts; how teachers’ perceptions of students’ ability influence their performance; and even how world leaders’ anticipation of conflict can make war inevitable. The beauty of the concept is how it shows that problems often contain the seeds of their own solutions: since behavior follows belief, a change in outlook can improve the outcome.

Consider this approach: study a company’s fundamentals — its past earnings, balance sheet, and tangible assets — and if its stock is trading below those of comparable companies, snap it up.

It may not sound exciting today, but this investment strategy was nothing short of revolutionary when Columbia business professor Benjamin Graham 1914CC introduced it in the 1930s. At the time, most investors assumed that market fluctuations were essentially inscrutable and that the best one could do was follow the herd, barring access to insider information. In his research, Graham demonstrated that investors could consistently beat the market if they examined publicly available financial information closely enough — and if they resisted the temptation to chase overhyped, speculative opportunities.

Graham’s philosophy, which he called “value investing,” eventually came to dominate US financial culture. Columbia became the intellectual heart of the movement, and Graham’s disciple, Warren Buffett ’51BUS, its most prominent champion.

In recent decades, the investment community has periodically embraced riskier approaches — especially during the 1990s and 2010s tech booms, when many startups without solid fundamentals became wildly successful — yet Graham’s strategy of rigorously scrutinizing a company’s intrinsic value remains a cornerstone of modern securities analysis.

Columbia physician E. Donnall Thomas’s last-ditch plan to save terminal leukemia patients sounded almost unthinkable: he would bombard them with such high doses of chemotherapy and radiation that it would obliterate their bone marrow, leaving them unable to produce any new blood cells or fight infection. Then he would implant healthy bone marrow from donors, in the hopes that it would take root and start producing clean, cancer-free blood. His team’s first human trial, launched in 1957 at the Columbia-affiliated Mary Imogene Bassett Hospital in Cooperstown, New York, failed tragically, with all six patients succumbing to infection or graft rejection. Critics called for an end to the research. But Thomas and his team persisted, learning how to match donors and recipients more precisely and to bolster patients’ fragile immune systems. By the 1980s, bone-marrow transplantation had become a standard treatment for many leukemias and other cancers. Today, the procedure saves the lives of tens of thousands of people annually.

One of the most influential physicists of the twentieth century, Isidor Isaac Rabi 1927GSAS, ’68HON, is best known for discovering how to measure the magnetic properties of atoms — a breakthrough that earned him the 1944 Nobel Prize in Physics. The Columbia professor’s methods made possible a host of new technologies, including lasers, magnetic resonance imaging (MRI), atomic clocks used in GPS satellites, and even quantum computing.

Although he preferred the quiet of his Pupin Hall laboratory, Rabi also possessed a gift for scientific leadership and played a key role in ushering in a new model of large-scale, collaborative research that would come to be known as “big science.” During World War II, he helped lead America’s all-hands-on-deck efforts to develop radar and the atomic bomb; after the war, he emerged as a scientific statesman of the highest order and devoted himself to championing similarly ambitious projects for peaceful purposes. Most notably, he was the driving force behind the creation of CERN, the Geneva-based research organization that now operates the Large Hadron Collider, the world’s premier facility for particle physics.

To Rabi, CERN was as much a diplomatic enterprise as a research effort, a way of promoting peace by engaging scientists from around the world in shared intellectual pursuits. It succeeded on both fronts, bringing together tens of thousands of physicists from more than one hundred countries on projects that are still probing the fundamental structure of the universe to this day. The collaborative model that Rabi helped pioneer also inspired endeavors like the Human Genome Project, the Hubble Space Telescope, and today’s multi-institutional efforts to combat cancer and climate change.

After attending thousands of births and observing how physicians often struggled to identify newborns who needed urgent care, Columbia anesthesiologist Virginia Apgar ’33VPS developed the first standardized system for evaluating babies’ health. She showed that if doctors quickly measured heart rate, respiratory effort, muscle tone, skin color, and reflexes, they could generate a reliable snapshot of an infant’s condition — what became known worldwide as the Apgar score.

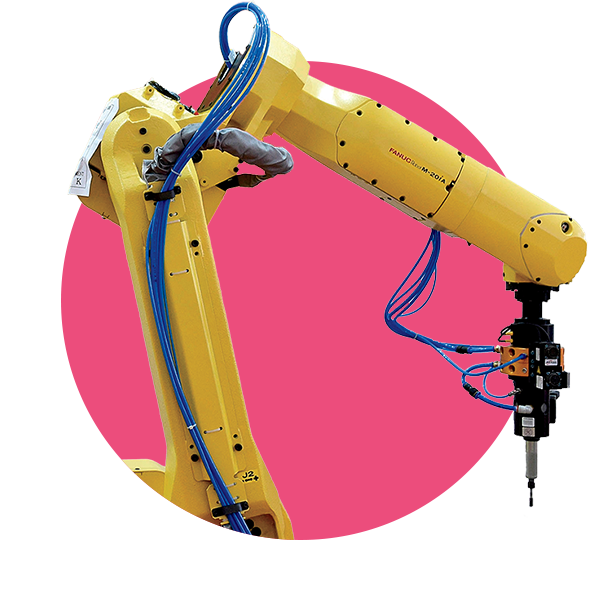

Before R2-D2, before the Jetsons’ tin-can housekeeper, Rosey, there was Unimate — the first robot ever to clock in on a factory floor.

The brainchild of Joseph F. Engelberger ’46SEAS, ’49SEAS and his business partner George Devol, Unimate got its start prying hot metal plates out of a die-casting press at a General Motors plant in Trenton in 1961. By the end of the decade, it was performing other dangerous and repetitive tasks at GM, Ford, and Chrysler plants across the country — welding chassis, applying adhesive to windshields, spray-painting car bodies. A spoof résumé produced by Engelberger’s company, Unimation, summed up his robot’s strengths: it could work twenty-four hours a day, welcomed physical and emotional abuse, and was unaffected by heat, cold, fumes, or dust.

Engelberger, who had studied physics and electrical engineering at Columbia, sold Unimation to Westinghouse in 1983. He then consulted for NASA, pushing the idea that unmanned, remote-controlled vehicles could explore other planets. Eventually he turned his attention to developing robots for use in health-care settings; one of his inventions, HelpMate, was until a few years ago still serving meals to patients in hospitals around the world.

“He was years ahead of his time,” said Jeff Burnstein, president of the Association for Advancing Automation, upon Engelberger’s death in 2015. “Early on, he asked the one question that continues to transform the industry: ‘Do you think a robot could do that?’”

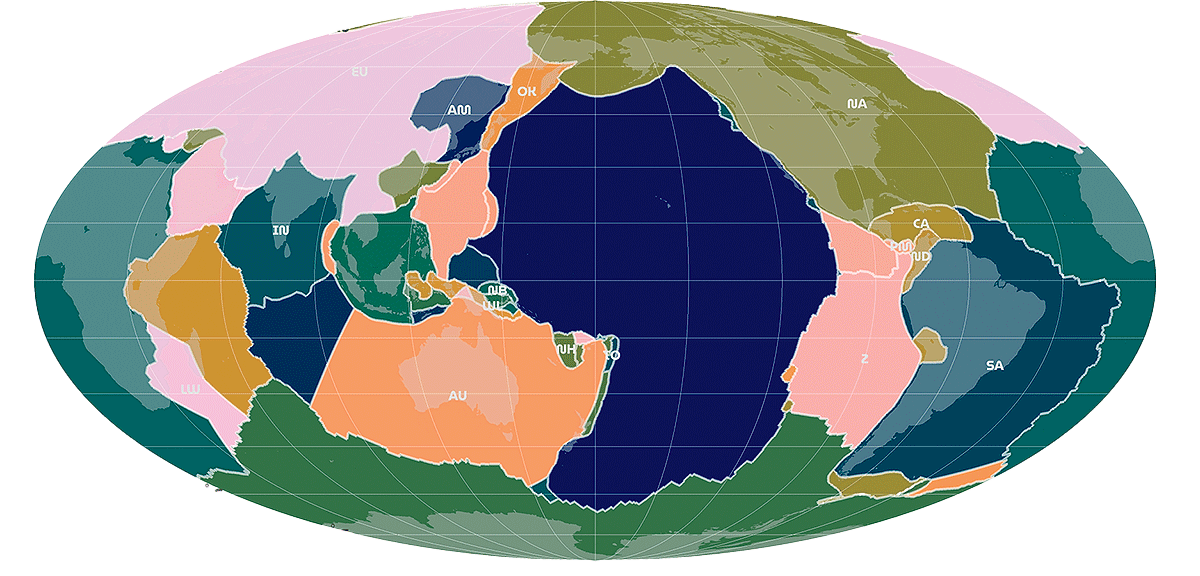

The earth was long thought to be solid, fixed, permanent.

Then, in the 1960s, scientists at Columbia’s Lamont-Doherty Earth Observatory used sonar imaging to peer deep beneath the oceans and noticed patterns on the seafloor that made no sense at first. They detected vast underwater mountain ranges, volcanic eruptions at their crests, and odd magnetic signatures in the surrounding rock. After years of analysis, the scientists reached the startling conclusion that the earth’s surface is in constant motion. Specifically, they found that hot molten material is continuously welling up from within the mantle and bursting through at mid-ocean ridges to form new crust. This slow but unrelenting process pushes older crust outward — triggering earthquakes, giving rise to mountains, and over millions of years driving the movements of entire continents.

The Lamont team’s findings provided the first definitive evidence for the theory of plate tectonics, building upon a decades-old, previously dismissed idea that the continents are adrift upon viscous layers of mantle. In doing so, the Columbia scientists — including Marie Tharp, Bruce Heezen ’57GSAS, Walter Pitman ’67GSAS, and Lynn Sykes ’65GSAS, ’18HON — helped usher in a paradigm shift that some have likened to the Copernican revolution. By revealing the tectonic forces that continually reshape the planet’s surface, they gave other scientists the tools to reconstruct the earth’s ancient past with unprecedented clarity, tracing the formation and breakup of the supercontinent Pangaea and charting shifts in ocean currents and atmospheric patterns that drove past ice ages and warm periods. The Columbia team also provided the scientific community a powerful new lens on the present: for the first time, researchers could view modern environmental conditions in the context of the earth’s history, comparing them to earlier eras.

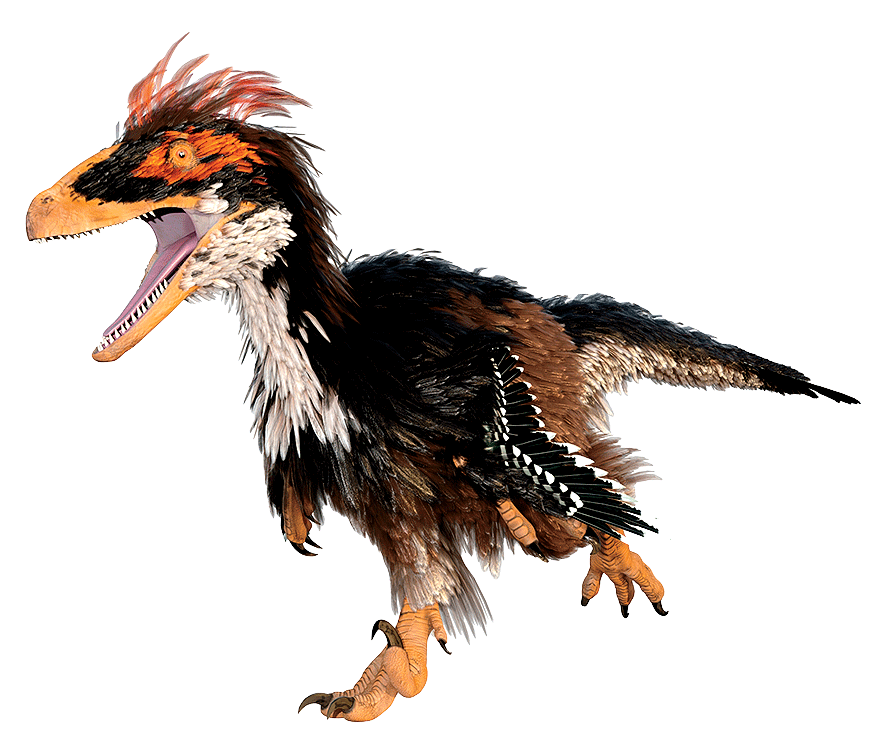

If you went to school before 1980 or so, it’s likely that you were taught that dinosaurs were scaly, sluggish, dim-witted reptiles that had all gone extinct. That was before John Ostrom ’60GSAS overturned nearly everything we thought we knew about them. The foremost dinosaur expert of his generation, Ostrom spent decades arguing that other paleontologists had misclassified dinosaurs as oversized lizards. Despite superficial resemblances to crocodiles and other reptiles, he suggested, many species were probably agile, warm-blooded, and intelligent, more like mammals. Ostrom backed up his ideas with new fossil discoveries and rigorous reinterpretations of existing specimens. His coup de grâce was helping to prove that the birds outside your window represent a living group of dinosaurs descended from theropods, the group that includes Tyrannosaurus and Velociraptor.

The field of paleontology, although famously slow to embrace new ideas, has in recent years accepted most of Ostrom’s core conclusions. Visit any natural-history museum today and you’re likely to see dinosaurs that look like they stepped right out of one of his papers: they may be depicted socializing, hunting in packs, and sporting vibrant plumes.

For centuries, the great thinkers agreed on one thing: human consciousness was elusive. If it wasn’t beyond the physical realm entirely, then it was so complex that no scientist could ever hope to explain it.

Eric Kandel wasn’t so sure. As a young psychiatrist in the 1960s, he believed that advances in neurology would soon enable scientists to bridge the divide between mind and matter. Especially promising, he thought, were new techniques for recording the electrical impulses of brain cells. Whereas most researchers saw these tools as merely a way to describe how neurons communicate with each other, Kandel thought that by monitoring how their electrical chatter fluctuated over time, he might reveal how thoughts take shape.

Over the next four decades, Kandel succeeded in uncovering the biological roots of cognition. In a series of landmark studies on the California sea slug — a mollusk capable of simple feats of learning and memory — he and his colleagues discovered that the formation of short-term memories involves the strengthening of existing synaptic connections between neurons. The Columbia scientists then showed that forming long-term memories requires a cascade of genetic and molecular changes that trigger the growth of entirely new connections. To the surprise of many, Kandel’s group and others demonstrated that the same mechanisms underpin the cognitive processes of more advanced animals, including humans.

Kandel’s discovery that the brain transforms itself physically in the course of learning provided some of the strongest evidence yet for “neuroplasticity,” or the idea that the brain is ever-changing and highly adaptable. This insight, which earned Kandel a Nobel Prize in 2000, is now a defining principle of neuroscience.

The basic science of global warming is surprisingly simple — so much so that by the late 1800s, European scientists were already predicting that the burning of fossil fuels would cause atmospheric CO2 levels to spike, trapping heat near the earth’s surface and baking the planet. The harder part was recognizing when the warming had actually begun and how fast it was happening, since that required first understanding the earth’s natural climate cycles.

Much of that understanding came from Columbia’s Lamont-Doherty Earth Observatory, where, beginning in the 1950s, oceanographers, geologists, and atmospheric scientists set out to investigate the deep rhythms of the earth’s climate. The first to grasp the meaning of their results was Wally Broecker ’53CC, ’58GSAS, a Columbia geophysicist who discovered how ocean circulation patterns influence global temperatures. In his landmark 1975 paper, “Climatic Change: Are We on the Brink of a Pronounced Global Warming?” he warned that the planet was about to heat up dangerously. Broecker observed that the earth was just emerging from a decades-long natural cooling cycle that had been obscuring the effects of rising industrial CO2. Once the cooling cycle ran its course, he predicted, temperatures would rise more sharply than they usually do when a natural cycle shifts from cooling to warming. He was right: global temperatures began to climb at an unnatural rate the very next year.

Columbia climate scientists have long striven to turn their findings into tools that people can use to adapt to a changing world. One of their biggest successes has been the development of seasonal forecasts that help communities anticipate weather patterns months in advance. Columbia researchers Mark Cane and Steve Zebiak created the first of these forecasts in the 1990s, following their discovery that El Niño — a periodic warming of Pacific Ocean waters that influences global weather — could be reliably modeled and predicted. That breakthrough led Columbia to launch the International Research Institute for Climate and Society (IRI), whose scientists issue monthly forecasts in dozens of countries and help people integrate them into everyday decision-making. Today, tens of millions of farmers use the forecasts to guide planting decisions, while humanitarian agencies rely on them to position emergency supplies in areas most likely to experience drought, floods, or other extreme events.

For decades, women in many parts of the world faced a greater risk of dying in childbirth than women in the US and Europe had fifty years earlier. To a group of Columbia researchers led by public-health dean Allan Rosenfield ’59VPS, that was a human-rights crisis with no end in sight. After years spent working in underserved regions, they believed that global health leaders were prioritizing the wrong solutions, building high-tech, Western-style hospitals in big cities while rural women lacked even basic care.

In response, in the early 2000s, the Mailman School of Public Health partnered with governments, NGOs, and local communities in more than a dozen countries to rethink maternal care from the ground up. Through its Averting Maternal Death and Disability (AMDD) program, the school trained nurses and midwives to spot complications early and equipped remote clinics to perform emergency procedures like C-sections. Columbia faculty also helped to expand the definition of who could provide such care if no obstetricians were available. These efforts helped set new international standards for maternal care and contributed to a 40 percent reduction in pregnancy-related deaths in low-income regions over two decades. In recent years, AMDD researchers have also exposed widespread mistreatment of women during childbirth throughout the world, helping to spark a global movement for respectful maternity care.

International politics isn’t governed solely by raw power and rational calculation — it’s also driven by world leaders’ cognitive biases and misreadings of one another. This is the central insight of Robert Jervis, a Columbia political scientist who first applied psychological principles to the study of decision-making in matters of war, diplomacy, and nuclear deterrence. His scholarship, most notably his 1976 book Perception and Misperception in International Politics, influenced how a generation of foreign-policy experts viewed geopolitical competition and made the world a safer place. Washington policy analysts have for decades regularly invoked Jervis’s writings when advising officials in times of international tension, reminding them of the natural tendency to view adversaries as more aggressive than they really are, to resist changing their minds when confronted with new information, and to underestimate the importance of factors that they don’t control. Colin Kahl ’00SIPA, a national-security adviser in the administration of President Barack Obama ’83CC and undersecretary of defense for policy under President Joe Biden, said in a 2022 interview that there was “rarely a conversation” on national-security issues in the White House Situation Room when he and colleagues did not draw on Jervis’s work.

If you’ve ever taken a mail-in ancestry test or had your genome analyzed to diagnose an illness, you’ve benefited from the work of Columbia biochemical engineer Jingyue Ju. In the 1990s, Ju and colleagues developed fluorescent imaging techniques for DNA sequencing, paving the way for the Human Genome Project. His laboratory at Columbia has since made further advances that power modern genomic research and precision medicine, making it possible to decode an entire human genome on a credit-card-sized chip for under $1,000. These technologies enable scientists to tailor cancer treatments based on tumor genetics, identify rare inherited diseases in children, and track the spread of viruses. Ju’s team is now developing even faster, cheaper, and more accurate DNA-sequencing tools, which he says could make genetic analyses as common as blood tests. “Doctors will then be able to spot diseases in their earliest stages,” he says, “and treat them before symptoms arise.”

Many scientists once thought that humanity had conquered the microbial world and that infectious diseases would soon be eliminated by our growing arsenal of vaccines and antibiotics. Stephen S. Morse, an epidemiologist at the Mailman School, was among the first to recognize that the germs were mounting a comeback. His landmark book Emerging Viruses alerted the scientific community to the fact that growing numbers of infectious diseases were popping up every year, driven by human encroachment into wildlife habitats. And when zoonotic viruses did make the leap into humans, they were spreading farther and faster than ever before, largely because of global travel. In the 2000s, the Mailman School established itself as a hub of research aimed at combating future pandemics, with Morse, fellow epidemiologist W. Ian Lipkin, and others launching international efforts to improve early-warning systems and containment strategies. From 2009 to 2014, Morse helped lead USAID’s Predict program, which developed a global surveillance system anchored in countries with large zoonotic reservoirs. Lipkin, as director of Mailman’s Center for Infection and Immunity, is now widely known as the world’s top virus hunter. Hopscotching the globe to detect novel diseases and contain outbreaks, he and his colleagues have discovered or helped identify more than 2,500 infectious agents. A few of them — West Nile virus, SARS, MERS, Zika virus — you may have heard of. But many you haven’t, because the Columbia scientists stamped them out before they could spread.

product on the market today owes something to computational advances from Columbia researchers. Smartphone calls, as well as Zoom and FaceTime video chats, run on Voice over Internet Protocol (VoIP) technology, which is built on protocols developed by Columbia computer scientist Henning Schulzrinne. High-quality video streaming, meanwhile, depends on the MPEG-2 and AVC/H.264 compression standards that Columbia electrical engineer Dimitris Anastassiou helped to develop. And if you’re worried about hackers, you’re likely already benefiting from digital safeguards inspired by Columbia computer scientist Salvatore Stolfo, whose research in intrusion detection helped to lay the foundation for security systems built into countless connected devices.

in the US, with tens of thousands of bridges and dams showing signs of serious wear. But identifying which ones need to be replaced and which can get by with patchwork repairs is notoriously difficult. Columbia civil engineers Andrew Smyth, Raimondo Betti, Adrian Brügger ’04SEAS, ’17SEAS, and George Deodatis ’87SEAS are helping to solve this puzzle. All have designed sophisticated methods of real-time infrastructure monitoring, often using remote-sensing networks, to estimate the risk that a bridge could buckle, a dam could crack, or a building could collapse. Working with public agencies in the New York City area, they’ve helped retrofit several major suspension bridges to make them more resilient to threats like hurricanes and earthquakes. Their techniques are now used by engineers around the world to observe everything from skyscrapers to tunnels.

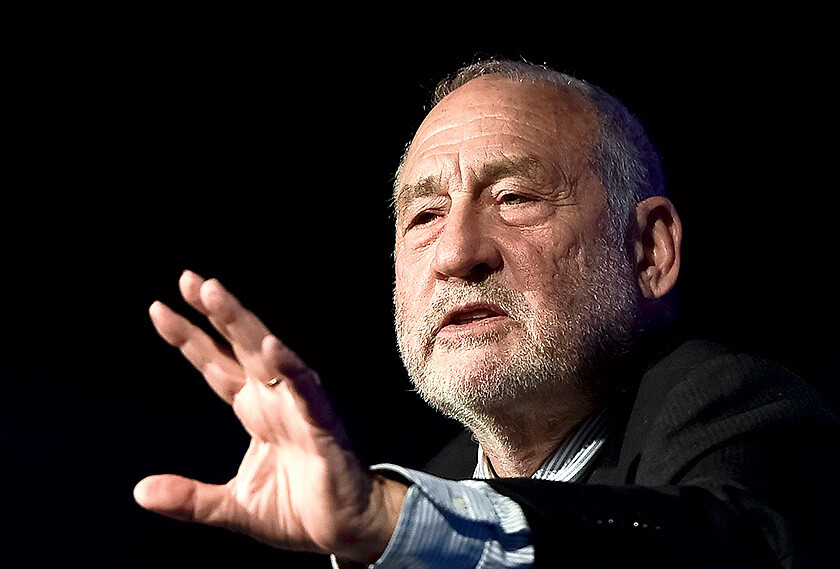

of national prosperity. It rewards wasteful and even harmful economic activity. And it fails to account for health, education, inequality, and the environment. These criticisms have been leveled against the gross domestic product many times since its widespread adoption in the mid-twentieth century. But the hits never landed as hard as when they came from Columbia’s Nobel Prize–winning economist Joseph Stiglitz, who fifteen years ago used his stature as a former World Bank chief economist to launch a global movement challenging GDP’s supremacy. Heading up several international commissions on the topic, Stiglitz published influential reports outlining how nations could do better. He urged governments to promote dialogue about what truly matters to people, to develop new metrics that reflect those values, and to integrate the results into budgetary planning. Countries like New Zealand, Scotland, Wales, and Finland have since begun to implement such changes, and the European Union is now advancing proposals to incorporate a range of new well-being indicators into policymaking.

as inert bits of information that determine whether our bodies run smoothly or fall prey to disease. But when Thomas Hunt Morgan and his Columbia students launched the world’s first genetic studies more than a century ago, they were actually probing a deeper mystery: how do cells know how to assemble themselves into the diverse tissues that make up a whole organism?

Over time, scientists have learned that genes are only part of the answer, since they are continuously switching on and off in response to cues in a cell’s environment — cues that ultimately guide a cell’s development and role. Gordana Vunjak-Novakovic, a Columbia biomedical engineer, has done as much as any living scientist to uncover how cells are shaped by their surroundings and to harness that knowledge for therapeutic purposes. In her laboratory, she designs small biochambers that mimic the conditions found in different parts of the human body, right down to temperature, oxygen, nutrients, electrical activity, motion, and physical compression. She then seeds the containers with adult stem cells harvested from a patient. After a few weeks, the chamber yields a piece of living bone, heart, liver, or lung tissue, of any desired shape or size and a perfect genetic match to the donor. The goal is to transplant the new tissues into an injured or ill person’s body like spare parts. In 2023, the first clinical trial using Vunjak-Novakovic’s methods was successfully completed, providing new facial bones to people who had been disfigured in car crashes or suffered bone degeneration. Other scientists are now using her lab-grown tissues to study how patients will respond to drugs and how cancer spreads between organs. Like so many Columbia breakthroughs over the past century, those in Vunjak-Novakovic’s lab point the way toward more.

Illustrations by Rob Wilson