Peer into the human skull, probe the brain’s tofu-like texture, and there, in that microscopic terrain, the neurons exist, nearly infinitesimal. Fifty of them would fit on the period at the end of this sentence. Most form before birth and stay with us until death, although some, due to disease or disuse, eventually shrink, slow down, or succumb. The brains of frogs hold sixteen million neuronal cells; fox terriers, one hundred sixty million. Yet the human brain, with its eighty-six billion neurons, still doesn’t house the most. The African elephant has three times as many, and blue whales likely have billions more, though no one is certain.

Individual neurons are not self-aware. They do not know what they are, where they are, or who you are. They do not think. Rather, they permit us to think. Like the frenzy within a pinball machine, the neurons fling directives back and forth, ceaselessly communicating and connecting with other nerve cells. These neuronal networks control every thought, feeling, sensation, and movement. They are the conduits that lead to consciousness; they make sense of our senses. Only because of them do our brains and bodies work. Minus the networks, our minds would be slush, gibberish. Phantasms would replace perceptions.

Each neuron typically links to thousands more, perhaps up to fifteen thousand more, drawing on the measliest of electrical signals (about 0.07 volts; an AA battery carries twenty times that voltage). Those impulses, moving neuron to neuron, sprint through a phalanx of connectors, called synapses, at speeds of up to almost three hundred miles per hour. Signals from the brain’s motor cortex, for example, rush through the central nervous system to the neuronal networks in the legs. Those electrical pokes regulate balance, direction, stride, and speed, along with dozens of other things; such is the abridged neurological backstory to taking a single step. By the end of adolescence, the neurons will have engineered five hundred trillion connections. Take those connections (from just one brain, mind you), string them along Interstate 95 somehow, and they would stretch from Columbia University to Columbia, South Carolina.

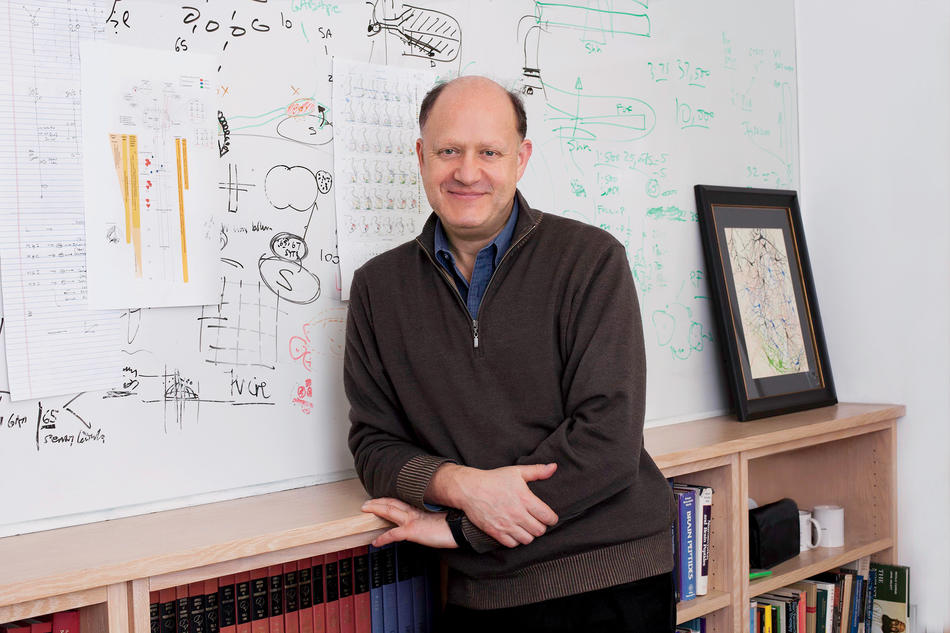

Neurons are colloquially called the brain’s “basic building blocks.” And we do know the basics about how individual neurons work. But fathoming how billions of them talk across seventy-eight compartments of brain topography is a conundrum. And repairing flawed networks to conclusively cure brain disorders, like autism or Alzheimer’s, remains an enigma: looming, daunting, slow to undrape itself. “I don’t want to make it sound like we know nothing,” says Michael Shadlen, a professor of neuroscience at the Columbia University Herbert and Florence Irving Medical Center (CUMC). “But there are basic, basic phenomena that we know nothing about. Everything we discover provokes deeper questions.”

Wrapping one’s head around the human mind is very hard. Figuring yourself out always is. “The greatest scientific challenge we are now facing,” says Charles Zuker, professor of biochemistry, molecular biophysics, and neuroscience at CUMC, “is to understand the workings of the brain.”

The glass building awaits in West Harlem, at the intersection of Broadway and 129th Street, thirteen blocks north of the Morningside Heights campus gates. Overshadowing a space previously occupied by long-abandoned warehouses, it was the first structure erected on the school’s new seventeen-acre Manhattanville campus. A $250 million gift helped make it happen — from the Renzo Piano ’14HON design to the construction of the building’s more than fifty laboratories.

Dawn M. Greene ’08HON, the philanthropist, bestowed the gift in 2006. Her husband of nineteen years, Jerome L. Greene ’26CC, ’28LAW, ’83HON, was an attorney, real-estate developer, and billionaire; he graduated from Columbia Law School just before the Great Depression began. Over the next seventy-one years, he would give hundreds of millions of dollars to charitable causes. He died in 1999, age ninety-three, one of New York City’s most powerful figures. Even after death he endures: the name on the building is the Jerome L. Greene Science Center.

The building’s approximately eight hundred tenants will include scientists, principal investigators, lab managers, postdocs, graduate students, and staff from Columbia’s Mortimer B. Zuckerman Mind Brain Behavior Institute, itself relatively new. The institute was established in December 2012 with a $200 million gift from Zuckerman ’14HON, owner of the New York Daily News and chairman of U.S. News & World Report. Says Thomas M. Jessell, one of the Zuckerman Institute’s three codirectors and a Columbia professor of biochemistry, molecular biophysics, and neuroscience: “Our simple task now is to create the best institute for neural science in the US, and, arguably, in the world.”

The move into the Greene Science Center kickstarts that assignment. “Really great science is going to come from it,” says Shadlen, a Zuckerman Institute principal investigator. A place for “the collision of ideas,” as Jessell likes to say. Right now, the institute’s scientists are largely disconnected, geographically speaking; they’re spread across six buildings throughout the Morningside Heights and medical center campuses. “We’ve really been constrained, hindered, slowed down by all these labs that have similar interests, scattered all over,” says Randy Bruno, another Zuckerman Institute investigator.

Just as neurons need to commingle, apparently so do scientists. The stereotype of a lone researcher experiencing a eureka moment in a secluded little lab survives only as a science-fiction trope. In real life, discovery hardly ever happens that way. “These are complex problems, and we have not broken them,” says Richard Axel ’67CC, a Zuckerman Institute codirector and Columbia professor of biochemistry, molecular biophysics, pathology, and neuroscience. “The ability to understand will require looking at a problem through a multiplicity of eyes.” The relocation to the Greene Science Center collects researchers from more than twenty disciplines throughout Columbia: neuroscientists, data scientists, molecular biologists, stem-cell biologists, electrical engineers, biomedical engineers, psychologists, mathematicians, physicists, theorists, and model builders. “If you only talk to people who work on the exact same thing you work on, you probably don’t generate as many new ideas as you could,” says Bruno. “Getting together people with different expertise, very different research programs, but a common purpose of understanding the mind — yeah, that’s fabulous.”

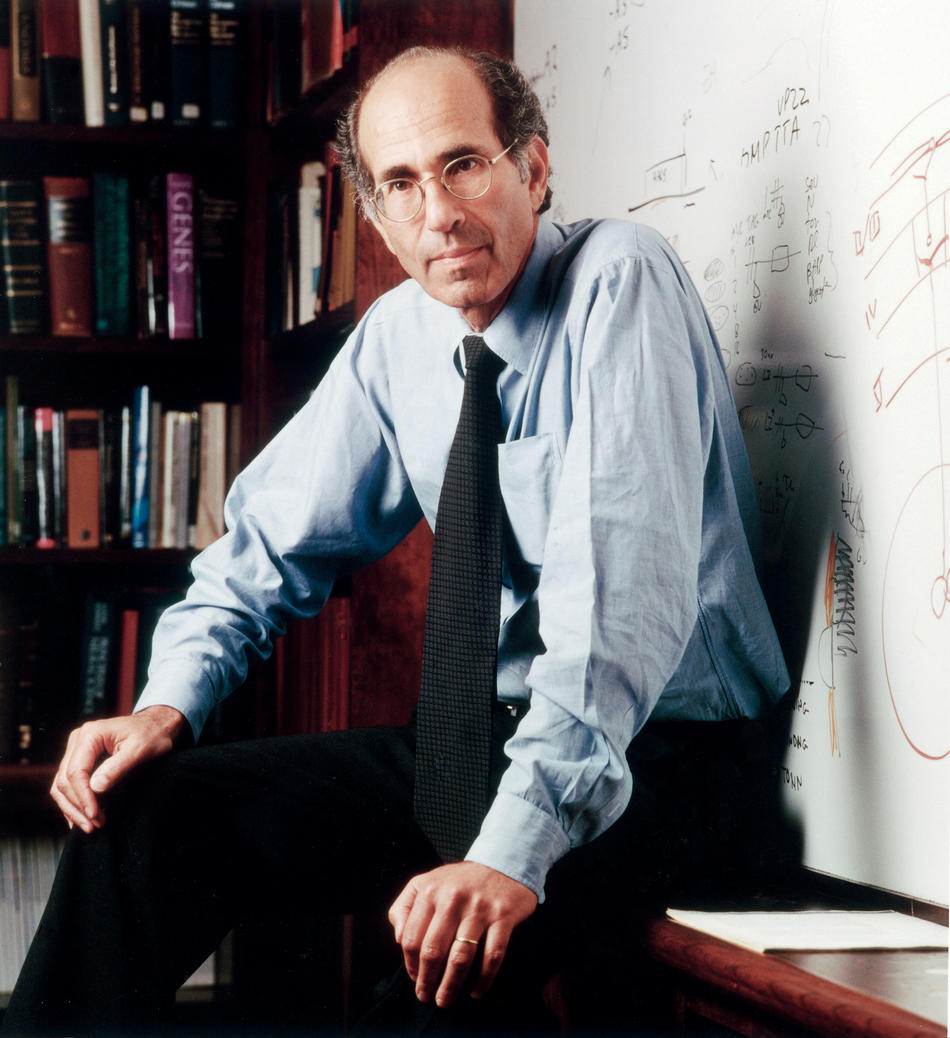

Scientists don’t necessarily put a premium on luck, but they do subscribe to serendipity — of which proximity is a catalyst. “Science is a completely social interaction,” says Eric Kandel, the third Zuckerman Institute codirector, and a professor of neuroscience, psychiatry, biochemistry, and biophysics at CUMC. “I met Richard Axel in the late seventies. He became interested in the brain and nervous system. I wanted to learn molecular biology. Axel knew nothing about the brain. I knew nothing about molecular biology. And so we started to collaborate. He moved full-time into the brain, and I became comfortable with molecular biology.” Since that collaboration began, both men have become Nobel laureates.

At the Greene Science Center, a neuroscientist could, and almost certainly will, run into an electrical engineer or stem-cell biologist in a hallway, engage in conversation, and — eureka, ideas collide — that brief exchange may kindle new research, which may lead to collaboration, and after years or decades, maybe a cure. Like neural connections, discovery happens for one reason. Someone gets excited.

Kandel, eighty-seven, has been at Columbia for forty-three years. On his office desk sits *Principles of Neural Science*, a textbook he coauthored, first published in 1981 and now in its fifth edition. This particular copy, hardly conspicuous, lies beneath his computer monitor and serves as a screen booster.

“Look, I’ve been in the field for sixty years,” says Kandel. “We’ve made a lot of progress. But we’re at the beginning.”

Back in 1952, when Kandel was an NYU medical student, science really, really didn’t know much about the brain: “We didn’t know how smell worked. How taste worked. We knew nothing about learning and memory and emotion.” During the fifties, says Kandel, the only major brain lab in New York City was Columbia’s. Even the word “neuroscience” wasn’t coined until 1962. He recollects the first annual meeting of the Society for Neuroscience in 1971; 1,400 scientists showed up. Today, more than thirty thousand from eighty countries attend. “And now you can’t walk down Broadway without running into a half dozen brain researchers,” Kandel says, half joking. He joined Columbia in 1973, but even then: “So little was known. Almost everything you learned was something new.”

That is still true today. “There are so many psychiatric and neurological diseases that we just don’t understand and don’t treat successfully,” says Kandel. “This is a phenomenal problem facing humanity.” Among the more common brain disorders: Parkinson’s, Huntington’s, Tourette’s, epilepsy, narcolepsy, depression, panic attacks, anxiety, ADHD, OCD, and PTSD (there are hundreds more). “You have to be an optimist in this field,” says Jessell. “It’s big and it’s complicated. It’ll take time to achieve satisfying answers to some of the bigger questions.”

But an imposing technological apparatus, which may help fast-track potential treatments, is arriving. One example: in the basement of the Greene Science Center will be an array of eighteen two-photon microscopes. With them, scientists will see neuronal communities talk to each other in real time; researchers will record those glinting images and replay them endlessly for study. Five years ago, none of this was possible. The amount of data generated by the two-photon is immense, even when the experiment is a simple one. Put a lab mouse on a treadmill, scan the neurons twinkling in its hippocampus for a half-hour — and a terabyte of information emerges, enough to keep Zuckerman Institute mathematicians and statisticians decoding for weeks.

Two-photon microscopy is state of the art, but perhaps only for the moment. Fortified with a $1.8 million grant from the National Institutes of Health, Zuckerman Institute principal investigator and biomedical engineer Elizabeth Hillman is developing SCAPE, a microscope that widens the view from small neuronal groups to whole brains. “With SCAPE, we can see the entire brain of an adult fruit fly in real time as it walks, crawls, even as it makes decisions,” says Hillman; SCAPE generates its three-dimensional images ten to one hundred times faster than a two-photon microscope does. “This advance,” says Jessell, could “unlock the secrets of brain activity in ways barely imaginable a few years ago.”

And it could lead to cures. Already, researchers routinely manipulate individual neurons with electrical nudges, and can even turn off the genes inside a fruit fly’s motor neurons (a nifty trick, given that a fruit fly’s entire brain is barely bigger than the tip of a toothpick). Now, after shutting off selected genes, scientists may use SCAPE to look for motor impairments in the fly, pinpoint which silenced genes are responsible, and then (one day) map the results onto the counterpart human genes. Somewhere therein could be clues to curing ALS, a grim and currently irreversible motor-neuron disease. “Science goes schlepping along,” says Zuker. “Then breakthroughs come that let you jump the steps. You go boom, you jump; boom, you jump; and a mega-barrier is lifted. How soon can discoveries be brought to patients? I cannot tell you. But we are far closer than we were before.”

Even with extraordinary tech advances, basic research, the day-to-day slog work, is indispensable. Without it, scientists will never unleash the miracle treatments awaited by millions. “You can’t fix a car if you don’t know what’s under the hood,” says Rudy Behnia, a CUMC assistant professor of neuroscience and a principal investigator at the Zuckerman Institute. “To cure problems of the brain, we first need to understand it.” By gradually mapping those trillions of neuronal connections (by looking under the hood), Columbia scientists will eventually grasp how the engine runs; effective treatments for neurological and psychiatric diseases will ultimately follow. And that, really, is the crux of the institute’s mission. “Understand first how the normal brain works, and then you have a much better chance of assessing how abnormalities arise,” says Jessell.

That is where the slog work comes in. Cultivating stem cells in a petri dish, then tweaking them so they’ll morph into certain kinds of neurons, is a comparatively modest enterprise, but often takes months. Learning how to record the neural activity in a mouse brain could require years. And a grad student within any of the neuroscience disciplines could spend more than a half decade exhaustively scrutinizing what appear to be minutiae. “There’s a lot of labor pain in science,” says Behnia.

Frequently, the basic research goes nowhere. Science, seldom a linear excursion, typically sputters ahead in spasms and is routinely cratered with crash landings and wipeouts. “You put a lot of time and effort into something, and you have to be OK with it not giving you anything,” says Behnia. “It happens to everyone. You have to let it go and start all over. It’s hard. You learn through your failures. But nothing really fails, because you learn what doesn’t work.” The converse is also true. As Jessell says to every last one of his graduate students: “You’ll probably discover something no one else in the history of mankind ever realized. It may not be a big thing. But if you enjoy the clarity that arises from small discoveries, then you’re attuned to being a scientist.”

Those “small discoveries” may someday lead to cures, and perhaps sooner than you might think. “These may be the early days,” says Bruno. “But some of the most fundamental discoveries will be made in the early days.”

Sarah Woolley, a Columbia professor of psychology and a Zuckerman Institute principal investigator, has been studying songbirds for more than twenty years. Take the zebra finch, for example, one of five thousand species of songbird and one of the few that sing only one song. “They breed in the lab,” she says. “They sing, they court, they mate for life, they make a nest, they raise babies, all in the lab.”

What attracts Woolley is the singing part, and the similarities between how songbirds and people learn to vocalize. That’s something very few animals do: just humans, parrots, hummingbirds, dolphins (probably), bats (maybe), and songbirds. “An ape does not learn to vocalize,” says Woolley. Dogs don’t learn to bark, and cats don’t learn to purr either. Those sounds surely convey a message: a monkey shrieks to let its troop know a snake is coming. “But that’s not learned,” says Woolley. “Those are calls built into the brain.”

A baby zebra finch, however, learns to sing by listening to its father. That’s pretty much the way people learn to speak; infants access language by listening to and socializing with their parents, or whoever’s around them the most. Sure enough, when Woolley slips a baby zebra finch into the nest of another species (the Bengalese finch), the baby learns the foster dad’s song. “That shows the power of live social interactions for baby birds to learn how to communicate,” she says. In both humans and songbirds, Woolley theorizes, a set of neurons in the brain rouses a distinct kind of learning, one stimulated by social relationships. Those neurons, she suggests, “may send signals that say, ‘OK, learn this, this is important, this matters.’”

Now the kicker. Woolley suspects those corresponding neurons in humans somehow malfunction in autistic children. For them, acquiring language is often an enormous obstacle. “Maybe the signals that say ‘learn’ do not go to the auditory system or the brain circuits that form memory,” she says. What is known: sensory processing is glitchy in autistic kids. A touch on the shoulder may repel them, a direct look might make them shudder, and a loud sound is often excruciating. No wonder so many of them avoid social interactions. Bonding may induce learning, but if bonding is painful, then so is learning — and it doesn’t happen. “But if we can figure out in our birds what makes their brains able to learn based on social interactions,” says Woolley, “then we might be able to find ways to help the autistic brain.”

In some ways, human brains and bird brains are unnervingly alike. “As we study the auditory cortex of the zebra finch, we find similarity after similarity after similarity after similarity,” Woolley says. If she can identify those neurons in the baby zebra finch, then Woolley can predict the approximate location of the comparable human neurons. “I can map my bird’s neurons onto a mammal’s neurons,” she says, “and thus onto a human’s neurons.” Someday, Woolley’s displaced baby songbirds might help millions of autistic children reconnect.

For decades, scientists readily swallowed the notion that a “taste map” partitions your tongue — sweet at the front, salty at the sides. “It’s all incorrect,” says Charles Zuker, the Columbia neuroscientist, who has spent the last fifteen years studying how we perceive taste. “There’s no taste map.” Instead, he says, thousands of taste buds are scattered around your tongue, with sweet, salty, sour, bitter, and umami receptor cells throughout.

Nor do our taste buds actually decide how the food tastes. They do the detection work, definitely, but they serve primarily as relays, dispatching signals directly to the brain. “Sweet taste cells in the tongue talk to sweet neurons,” says Zuker. “Salty to salty. Bitter to bitter.” Within those micro-groups of neuronal constellations, the taste is given a definition. That’s how you know the difference between strudel and sauerkraut.

When humans are hungry or thirsty, those neurons ping us to eat something or to get a glass of water. “Evolution is smart. Clean, clear, and simple,” says Zuker. “This is what innate hardwired circuits are all about.” Now Zuker and his lab of twenty-two researchers want to map precisely where the taste and thirst neurons are located in the human brain. Finding them could yield clues to controlling our cravings. In research with mice, Zuker’s team shone a fiber-optic light on the animals’ thirst neurons. The mice instantly sprinted to the water spout. “Even if the mouse is not thirsty, the mouse will think it’s thirsty, and look for water to drink,” he says. “Isn’t that remarkable?”

The same seems to apply to taste. The messages from the mouse tongue travel directly to its taste neurons. Just as in humans, those nerve cells are dedicated strictly to the five basic taste qualities. Activate the bitter neurons while a mouse drinks regular water, and it’s repelled. (The mouse squints, shudders, and jiggles its head, just like someone who bit into a lemon.) But silence the bitter neurons, and the mouse will slurp bitter liquid.

The inferences are dumbfounding. Could physicians someday manipulate neurons to regulate diet, consumption, and sugar cravings — perhaps with a pill? “There are amazing implications,” says Zuker. “I think the field is poised to do something very special.” Then, reining it in: “There are challenges — making sure [a pill] acts on the right group of cells, that it targets the right circuit.” And a reminder: “We are still doing basic neuroscience. We are still at the stage of uncovering fundamental logic and principles.” Yet from his lab’s ever-accumulating data, one can extrapolate the prospective human applications — controlling anorexia, obesity, and diabetes.

More than one-third of adult Americans today are obese, and at increased risk for heart disease, stroke, and cancer. Thirty million Americans have diabetes, and three hundred thousand die from it annually. Overeating and excessive sugar consumption are major contributors to both obesity and type 2 diabetes. Finding a way to govern them with pharmaceuticals would be a miracle. “And now we can begin to ask,” says Zuker, “if we can control feeding and sugar craving to make a meaningful difference. I believe the answer will be yes.”

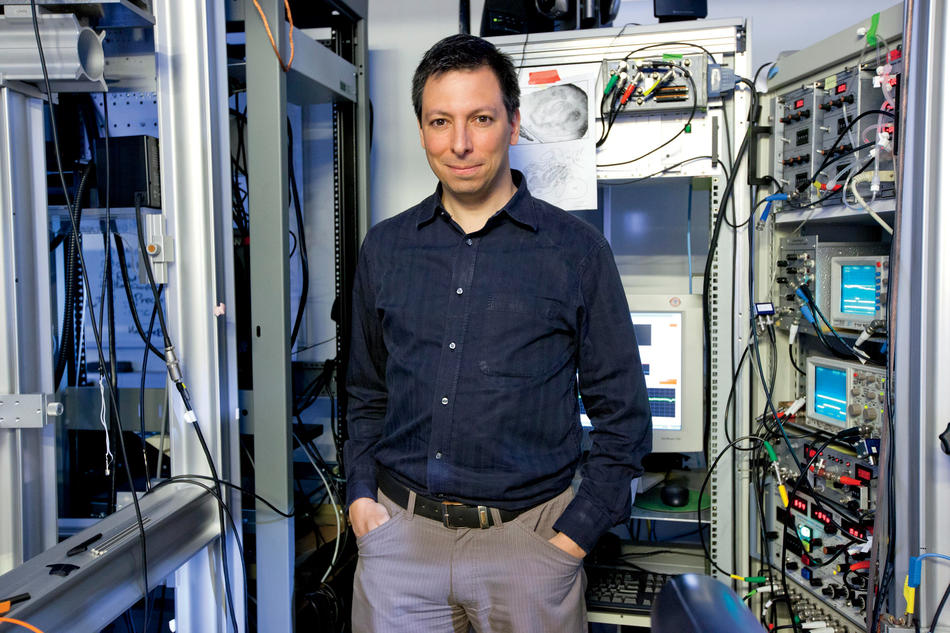

The decades-old “left brain–right brain” paradigm, although not completely discarded by researchers, now survives considerably diminished, a moldy scientific chestnut (left-brainers, supposedly, are analytical and good at math; right-brainers, emotional and hyper-imaginative). “There is some truth to it,” says Randy Bruno, a CUMC associate professor of neuroscience. “But not all functions are completely one side or another. Some things are not lateralized at all.” Instead, Bruno’s research reveals something much more tantalizing: “What we’re working on now is top brain and bottom brain.”

For more than twenty years, Bruno has been investigating the cerebral cortex, an outer sliver of brain barely thicker than a credit card and critical for higher-order functions like perception and attention. In mammals, the cortex envelops nearly the entire organ, and divides into “upper” and “deeper” layers. Our deeper layers, evolutionarily older, faintly evoke the reptilian brain. Indeed, today’s alligators, turtles, and snakes have only the lower layers. “There’s a really good reason for why mammals developed the upper layers,” says Bruno. “But I don’t know what the answer is.”

Neuroscientists long assumed the upper cortex transmitted its sensory data — that’s everything you see, hear, smell, taste, and feel — directly to the deeper cortex. Without that, researchers believed, the lower region in mammals would never detect an outside world. But in 2013, Bruno and his team shut off the upper cortex in a mouse. What happened (or what didn’t happen) startled everyone, not just in Bruno’s lab, but in the scientific community worldwide.

“Nothing changed,” he says. Turns out the deep layers weren’t relying on the upper cortex at all; they still received the incoming sensory information. The two cortex regions, Bruno discovered, can operate independently of each other. Independent yet intertwined: “They do work together,” he says. “But they also look like they have different jobs. What’s the job of this half of the cortex versus the other half? I don’t know.”

But he already has a hypothesis. Perhaps, Bruno says, the upper layers mediate “context-dependent” behaviors, and make sense of intermingling and often conflicting situations. (A rabbit is hungry. It sees wildflowers nearby. But a hawk hovers overhead. Does the rabbit chance it and go for the wildflowers? Or take off and go hungry?) The computations performed in the upper layers, suggests Bruno, are good at evaluating conflicting data in context. They decide what to do.

If that’s so, then another theory, even more provocative, surfaces. Psychiatric patients often have problems making decisions that involve context. “Schizophrenics are an example,” says Bruno. “Interpreting sensory signals in context is difficult for them. They really struggle with it. They can’t deal with it.” Which raises the question: could the malfunctioning neuronal networks that cause schizophrenia and other psychiatric disorders reside somewhere in the upper layers?

Determining that — the approximate vicinity of the faulty networks — is huge. “We would know where to start looking,” says Bruno. “We could narrow down the places where the actual biological defect is occurring.” If researchers could then pinpoint those dysfunctional neurons and target them with drugs, effective treatments for psychological disease could eventually result.

Lots of ifs. “Until we finish the science, that part is still science fiction,” Bruno says. “But that’s the hope, right?”

Few people know this about the nose, but “most odors,” says Richard Axel, “do not elicit any behavioral responses without learning or experience.” That means your reaction to a smell is tightly bound up with memory. Whether garlic or gasoline, cologne or coffee, just-cut grass or just-smoked grass, your brain, not your nose, determines if you like or loathe the smell. Aromas transport you to memories that your brain has catalogued as pleasant or unpleasant; you respond accordingly. This is the core of Axel’s current research. “We are interested in how meaning is imposed on odor,” he says.

Already, at a literally neuronal level of detail, Axel has essentially explained how we smell: he has identified a family of roughly one thousand odor receptors, carried by nerve cells in the nose that talk to the olfactory bulb, the brain’s first relay station for smell. There, the odors are fine-tuned, processed, and propelled to other parts of the brain (“to at least five higher olfactory centers,” he says). For his seminal mapping of the smell system’s molecular bedrock, Axel shared the 2004 Nobel Prize in Physiology or Medicine with Linda Buck.

“A given odor will call forth different experiences and produce different emotional responses for different individuals,” he says. Those flexible behavioral responses — fashioned between the olfactory bulb and the hippocampus, where we collect memories — are more robust with smell than with any of the other four senses. Even the fragrance of jasmine, supposedly the most sensual of scents, is contingent on context, says Axel. Breathe it in while spending an evening with someone you love. “Then jasmine would elicit a very pleasant response,” he says. But that could change, based on new experience: “Suppose that same person turned around and hurt you seriously. Then jasmine no longer will have the same effect on you.”

In simpler brains, many aromas provoke an instinctive and unalterable response. When mice get a whiff of fox urine, their hardwired neural pathway sends them running. “That’s because mice, for a long time, have been prey to foxes,” says Axel. Only a few scents, however, are hardwired in humans. Smoke, probably, is one. Anything rotting is another (the stench of sulfur, akin to rotten eggs, is revolting to most everybody). But that’s about it. Says Axel: “It’s very hard to conjure up odors that elicit innate responses in people.”

After four decades of foundational work, Axel recognizes the connection between his fundamental research and cures that may lie far in the future. Discovering what’s under the hood could help clarify the latest curiosity about Alzheimer’s: for many patients, an early symptom is losing their sense of smell. “What can emerge from an experiment designed to understand one aspect of science can open up something more profound,” he says. “You go in, not knowing what is going to come out.”

In 1962, Eric Kandel commenced research on Aplysia (the sea hare), a blobby mollusk with protruding feelers that resemble rabbit ears. Studying sea hares, friends and colleagues warned, was a calamitous blunder; fifty-four years later, Kandel remembers their disapproval. “Everyone thought I was throwing my career away,” he says. But Aplysia, with only twenty thousand neurons in its central nervous system, became Kandel’s odd little portal into the human brain: “It has the largest nerve cells in the animal kingdom. You can see them with the naked eye. They’re gigantic. They’re beautiful.” Nearly four decades later, Kandel won the 2000 Nobel Prize in Physiology or Medicine. He had discovered how those neurons in Aplysia’s brain constructed and catalogued memories.

Today, as neuroscientists worldwide pursue remedies for Alzheimer’s and age-related memory loss, the findings from Kandel’s half century of work are considered indispensable. Substantive therapies for Alzheimer’s in particular are “poised for success,” says Jessell, a colleague of Kandel’s for thirty-five years. “We’re on the cusp of making a difference.” But accompanying that claim is a caveat; the fledgling remedies are not panaceas. “We’re not necessarily talking about curing the disease,” he says. “But we are talking about slowing the symptomatic progression of the disease so significantly that lifestyles are improved in a dramatic way. If in ten years we have not made significant progress, if we are not slowing the progression of Alzheimer’s, then we have to look very seriously at ourselves and ask, ‘What went wrong?’”

Breakthroughs could happen sooner, however. Some of the Alzheimer’s medications available now “probably work,” says Kandel, except for one obstacle: “By the time patients see a physician, they’ve had the disease for ten years. They’ve lost so many nerve cells, there’s nothing you can do for them.” Possibly, with earlier detection, “those same drugs might be effective.” That’s not a certainty, insists Kandel, only a “hunch.”

Years ago, Kandel had another hunch: that age-related memory loss was not just early-stage Alzheimer’s, as many neuroscientists believed, but an altogether separate disease. After all, not everyone gets Alzheimer’s, but “practically everyone,” says Kandel, loses some aspects of memory as they get older. And MRI studies by CUMC neurology professor Scott Small ’92PS have revealed that patients with age-related memory loss show defects in a brain region different from the one affected in early-stage Alzheimer’s.

Kandel also knew mice didn’t get Alzheimer’s. He wondered if they got age-related memory loss. If they did, that would be another sign the disorders were different. His lab soon demonstrated that mice, which typically have a two-year lifespan, do exhibit a significant decrease in memory at twelve months. With that revelation, Kandel and others deduced Alzheimer’s and age-related memory loss are distinct, unconnected diseases.

Then Kandel’s lab (again, with assistance from Small) discovered that RbAp48 — a protein abundant in mice and men — was a central chemical cog in regulating memory loss. A deficit of RbAp48 apparently accelerates the decline. Knocking out RbAp48, even in a young mouse brain, produces age-related memory loss. But restoring RbAp48 to an old mouse brain reverses it.

Now what may be the eureka moment — this from Gerard Karsenty, chairman of CUMC’s department of genetics and development: bones release a hormone called osteocalcin. And Kandel later found that osteocalcin, upon release, increases the level of RbAp48.

“So give osteocalcin to an old mouse, and boom! Age-related memory loss goes away.”

The same may prove true in humans. A pill or injectable could work, says Kandel: “Osteocalcin in a form people can take is something very doable and not very far away.” In less than a decade, age-related memory loss might be treatable. “This,” he says, “is the hope.”

As the ambitions of neuroscientists accelerate, the field has moved its goalposts to a faraway place. “We’re trying to understand behavior,” says Bruno. “Behavior is not straightforward. It’s an incredibly ill-defined problem.”

Behavior encompasses everything. Perception, emotion, memory, cognition, invention, obsession, infatuation, creativity, happiness, despair. To completely understand how the brain governs behavior, to neurologically plumb the wisps of human thought, one must unshroud innumerable obscurities at the subcellular level. “How do you define happiness or beauty? Somehow it’s based on connections in the brain,” says Jessell. Always, it gets back to the ever-pinging networks: “Without knowing the links between these eighty-six billion neurons that exist within the human brain, we don’t have a hope of understanding any aspect of human behavior.”

Decipher those links, and we will have figured out how we figure things out. How brain connections, for instance, ignite love connections. We could, conceivably, fathom ourselves practically down to the last neuron. Says Axel: “Do we understand perception, emotion, memory, cognition? No. But we’re developing technology which might allow us entry into these arenas for the first time. Perhaps we will get there. Perhaps.”

“We are tackling infinity,” says Bruno. “Behavior is this infinite space of ideas. Oh, probably not truly infinite. We’re finite beings. Only so many neurons are in our heads. But think about all you can do, and the vast realm of possibilities you can react to. Think about how large a set that is. I mean, you can’t count all of those things. The range of human possibility is staggering.”

Acknowledge this, and one could easily argue we’ve barely begun to know the brain. “We really are at the very beginning,” says Bruno. “How far along? I’d say 5 percent. We’re trying to tackle a collection of problems and questions that put us on the 5 percent end.”

Learn the rest — the remaining 95 percent — and we will quite literally understand ourselves. But this will take time. “We have a very fragile understanding of the principles by which these things work,” says Jessell. “I think we have at least fifty years before we can explain every aspect of human behavior.” Or possibly longer. Says Kandel: “On this — to have a satisfying understanding of the brain — I think we’re a century away.”

So little is known. Almost everything we learn is something new. What now? Tackling infinity, of course. “What could be more important,” says Axel, “than to understand the most elusive, the most complex, the most mysterious structure that we know of in our universe? That’s pretty damn important.” The answers, always within, now lie ahead.